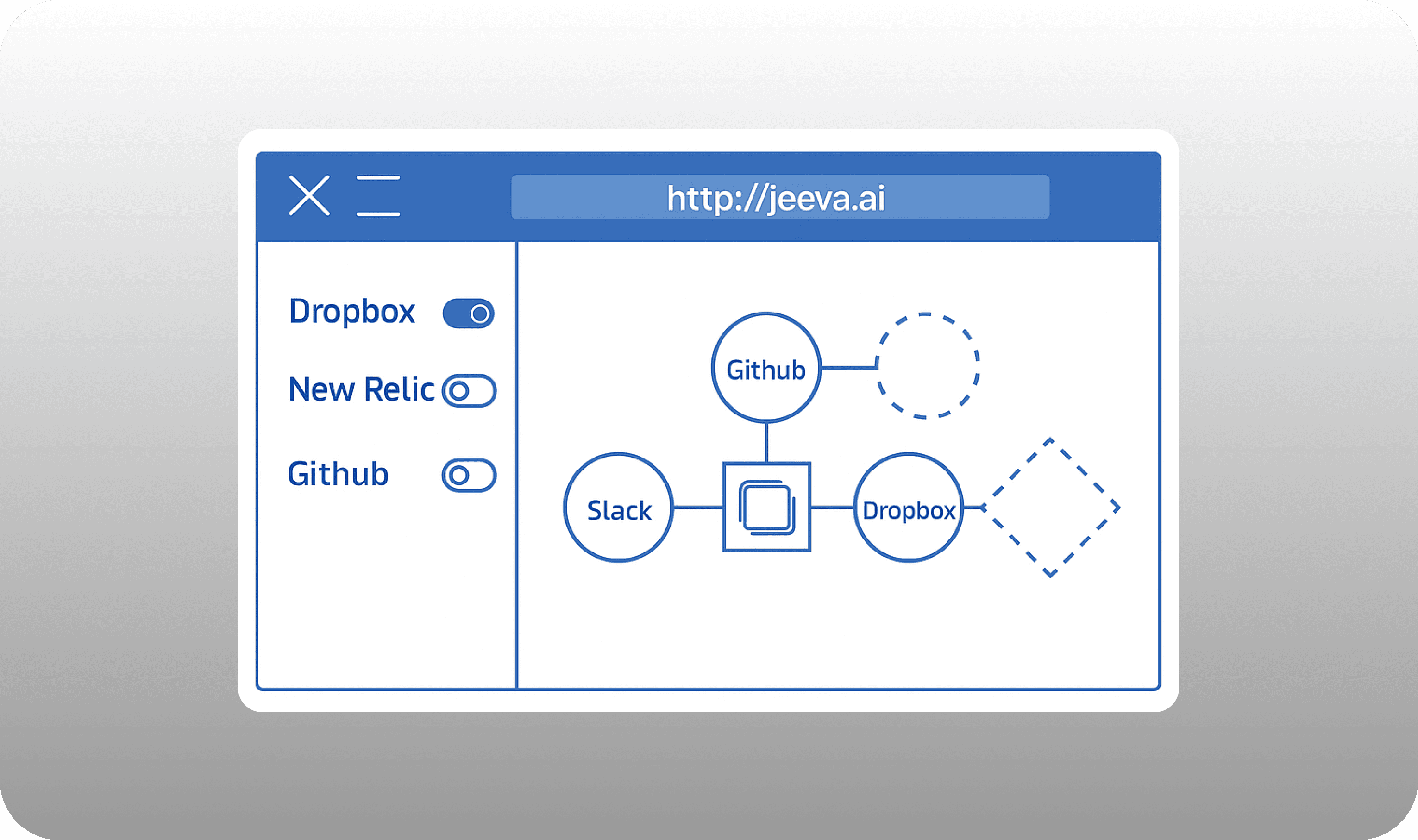

Integrations & Data-Portability Architecture

Policy-controlled, signed APIs plug Jeeva into any stack and let customers export or erase every byte.

What is the Integrations & Data-Portability Architecture?

Jeeva AI’s integration layer is a policy-governed fabric of signed APIs, real-time event streams and schema-versioned export jobs. It links the platform to CRMs, marketing-automation suites, enrichment feeds, telephony systems, analytics warehouses and any bespoke application a customer elects to connect. The same layer also provides a standards-based exit route: anything that enters Jeeva AI can be exported again without proprietary constraints, either continuously for operational syncs or in scheduled batches for archival and business-intelligence use. Exports follow the Data Protection, Data Classification and Data Deletion policies.

Why it matters

Revenue teams depend on timely, bidirectional data flow, while security, legal and finance teams scrutinise every third-party hop for encryption strength, residency guarantees and hidden cost. By embedding portability and governance into the integration architecture, Jeeva AI satisfies the risk, continuity and data-subject-rights mandates laid out in the Vendor Management Policy, Encryption & Key-Management Policy and the UK/EEA Cross-Border Transfer Procedure.

Vendor due-diligence & onboarding

Before a single webhook is enabled, every prospective connector travels through the formal Third-Party Risk Monitoring workflow. A risk tier (low, medium, high) is assigned using the criteria in the policy packet; high-tier vendors must complete the Vendor Assessment Questionnaire, provide recent SOC 2 or ISO 27001 attestations and accept contractual clauses on encryption, breach notification and audit rights. Integration code is merged only after Operations records an approved assessment and the relevant contract is countersigned.
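The merge gate described above can be pictured as a small check that lists whatever still blocks a connector. This is a minimal sketch, not the actual workflow: the `VendorRecord` fields and the `merge_blockers` function are hypothetical names invented for illustration, and only the rules themselves (approved assessment, countersigned contract, extra evidence for high-tier vendors) come from the text.

```python
from dataclasses import dataclass


@dataclass
class VendorRecord:
    """Hypothetical onboarding record; field names are illustrative only."""
    tier: str  # "low", "medium" or "high"
    assessment_approved: bool
    contract_countersigned: bool
    questionnaire_complete: bool = False
    attestation_on_file: bool = False  # recent SOC 2 or ISO 27001
    clauses_accepted: bool = False


def merge_blockers(vendor: VendorRecord) -> list[str]:
    """Return the reasons integration code may not be merged yet."""
    blockers: list[str] = []
    if not vendor.assessment_approved:
        blockers.append("assessment not approved by Operations")
    if not vendor.contract_countersigned:
        blockers.append("contract not countersigned")
    if vendor.tier == "high":
        # High-tier vendors carry the additional evidence requirements.
        if not vendor.questionnaire_complete:
            blockers.append("Vendor Assessment Questionnaire incomplete")
        if not vendor.attestation_on_file:
            blockers.append("SOC 2 or ISO 27001 attestation missing")
        if not vendor.clauses_accepted:
            blockers.append("contractual clauses not accepted")
    return blockers
```

An empty list means the gate is open; anything else can be surfaced directly in the pull request as the reason the merge is held.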

Code-defined data contracts

Each connector publishes an OpenAPI or GraphQL schema that lives in version control. Continuous-integration jobs compile the schema, run backward-compatibility tests and fail the build if a vendor makes an unannounced breaking change. Because downstream services read from the contract rather than hard-coded keys, a breaking change surfaces as a failed build instead of a “silent” failure at runtime. This control maps to the Change-Management Process and the System-Access & Authorization Control Policy.
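To illustrate what a backward-compatibility test might look for, the sketch below compares two versions of a heavily simplified object schema and reports removed fields, changed types and newly required fields. It is an assumption-laden example: the `breaking_changes` function and the flat `properties`/`required` layout are illustrative, and real OpenAPI or GraphQL diffing covers many more cases.

```python
from typing import Any


def breaking_changes(old: dict[str, Any], new: dict[str, Any]) -> list[str]:
    """List backward-incompatible differences between two simplified schemas.

    Each schema is assumed to look like:
    {"properties": {"email": {"type": "string"}}, "required": ["email"]}
    """
    problems: list[str] = []
    old_props = old.get("properties", {})
    new_props = new.get("properties", {})

    for name, spec in old_props.items():
        if name not in new_props:
            problems.append(f"field removed: {name}")
        elif new_props[name].get("type") != spec.get("type"):
            problems.append(
                f"type changed: {name} "
                f"({spec.get('type')} -> {new_props[name].get('type')})"
            )

    # A field that becomes required breaks callers that omitted it before.
    newly_required = set(new.get("required", [])) - set(old.get("required", []))
    for name in sorted(newly_required):
        problems.append(f"field newly required: {name}")

    return problems
```

A CI job could fail the build whenever the returned list is non-empty. Adding an optional field produces no findings, which matches the usual definition of a backward-compatible change.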

Secure transport & storage

All ingress and egress traffic is protected with TLS 1.2 or higher, meeting the minimum specified in the Encryption & Key-Management Policy. Data at rest resides in AES-256–encrypted stores under tenant-scoped KMS keys. Customers that enforce PrivateLink or IP-allow-listing can deploy a lightweight relay inside their own VPC, keeping credentials local and pushing only pseudonymised payloads through a mutually authenticated channel.
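On the client side, the TLS 1.2 floor can be enforced with Python's standard `ssl` module, as in the sketch below. The `make_client_context` helper is a hypothetical name; the document does not say how Jeeva AI's services or the relay configure TLS.

```python
import ssl


def make_client_context() -> ssl.SSLContext:
    """Build a client-side TLS context that refuses anything below TLS 1.2."""
    # The default context verifies certificates and checks hostnames.
    ctx = ssl.create_default_context()
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx
```

For a mutually authenticated channel such as the in-VPC relay, a client certificate could additionally be attached with `ctx.load_cert_chain(...)`; the certificate paths would be deployment-specific.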

Streaming for immediacy, batch for bulk

Operational events publish to a managed Kafka bus (or customer-supplied Kinesis/PubSub) within seconds, while cost-sensitive analytics loads are exported nightly to customer-owned S3 buckets or Snowflake stages as compressed, PGP-encrypted Parquet files. Every export file carries field-level data-classification tags so downstream systems can honour masking and retention obligations automatically.
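A downstream consumer might use the field-level tags roughly as follows. This is a sketch under stated assumptions: the tag names in `MASKED_TAGS` and the `mask_record` function are hypothetical, and the real vocabulary would come from the Data Classification policy.

```python
from typing import Any

# Hypothetical tag vocabulary; the actual labels are defined by policy.
MASKED_TAGS = {"restricted", "confidential"}


def mask_record(record: dict[str, Any], tags: dict[str, str]) -> dict[str, Any]:
    """Return a copy of the record with sensitive values masked.

    Fields that carry no tag are masked as well, so an untagged
    column fails closed rather than leaking.
    """
    masked: dict[str, Any] = {}
    for field, value in record.items():
        tag = tags.get(field)
        if tag is None or tag in MASKED_TAGS:
            masked[field] = "***"
        else:
            masked[field] = value
    return masked
```

Masking untagged fields by default is a design choice of this sketch, not something the text specifies; it trades convenience for safety when a new column appears before its tag does.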

Change control & rollback

A new connector follows the same branch → peer-review → automated-test → canary-rollout path that governs application code. If error rates exceed predefined thresholds, the orchestrator reverts to the prior version in minutes and opens an incident ticket, fulfilling the escalation steps in the Incident-Response Plan.
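The rollback decision can be reduced to a threshold check over a canary observation window. The sketch below is illustrative only: `CanaryWindow`, `should_roll_back` and the default values (2% error rate, 100 requests) are assumptions, since the text says thresholds are predefined without giving numbers.

```python
from dataclasses import dataclass


@dataclass
class CanaryWindow:
    """Request and error counts observed for the canary version."""
    requests: int
    errors: int


def should_roll_back(window: CanaryWindow, max_error_rate: float = 0.02,
                     min_requests: int = 100) -> bool:
    """Decide whether the canary should be reverted to the prior version.

    No verdict is given until min_requests have been seen, which avoids
    reacting to a handful of early failures.
    """
    if window.requests < min_requests:
        return False
    return window.errors / window.requests > max_error_rate
```

In practice the orchestrator would call a check like this on each evaluation interval and, on a `True` result, both revert the deployment and open the incident ticket.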

Deletion, retention & cross-border safeguards

On a data-subject-erasure request, a central privacy service enumerates all related identifiers and invokes each connector’s delete or suppress endpoint. When deletion is infeasible, vendors are contractually bound to tokenise the affected records within 30 days and provide audit evidence, reflecting the timelines in the Data Deletion Policy. EU/UK data stay in-region where possible; otherwise Standard Contractual Clauses (SCCs) or the UK International Data Transfer Agreement (IDTA) are executed and a Transfer-Impact Assessment is filed, as required by the cross-border-transfer procedure.
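The erasure fan-out can be sketched as a loop over connectors that records an audit entry per identifier. Everything named here is hypothetical (`Connector`, `erase_subject`, the callables standing in for vendor endpoints); only the delete-or-suppress order and the 30-day tokenisation window are taken from the text.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Callable, Optional

TOKENISE_DEADLINE_DAYS = 30  # from the text: tokenise within 30 days


@dataclass
class Connector:
    name: str
    # Hypothetical callables standing in for each vendor's endpoints.
    delete: Optional[Callable[[str], bool]] = None
    suppress: Optional[Callable[[str], bool]] = None


def erase_subject(identifiers: list[str], connectors: list[Connector],
                  requested_on: date) -> list[dict]:
    """Fan an erasure request out to every connector; return an audit trail."""
    audit = []
    for connector in connectors:
        for ident in identifiers:
            if connector.delete is not None and connector.delete(ident):
                outcome, due = "deleted", None
            elif connector.suppress is not None and connector.suppress(ident):
                outcome, due = "suppressed", None
            else:
                # Deletion infeasible: fall back to the tokenisation window.
                outcome = "tokenise_pending"
                due = requested_on + timedelta(days=TOKENISE_DEADLINE_DAYS)
            audit.append({"connector": connector.name, "identifier": ident,
                          "outcome": outcome, "due": due})
    return audit
```

Entries marked `tokenise_pending` carry a due date, so a follow-up job can chase the vendor's audit evidence before the deadline passes.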

Continuous monitoring

A monitoring daemon polls schema hashes, latency and error codes for every live integration. Schema drift or a sustained spike in 5xx responses triggers a Sev-2 alert and lowers the connector’s trust score in the admin console. Monthly summaries feed the Risk-Assessment & Management Programme, ensuring long-term visibility.
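Two of the checks described above, schema-hash drift and sustained 5xx errors, can be sketched with the standard library. The function names, the five-response window and the 50% threshold are assumptions made for illustration; latency tracking and the trust score are omitted.

```python
import hashlib
import json


def schema_hash(schema: dict) -> str:
    """Hash a schema deterministically so key order cannot cause false drift."""
    canonical = json.dumps(schema, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def sustained_5xx(status_codes: list[int], threshold: float = 0.5,
                  window: int = 5) -> bool:
    """True if at least `threshold` of the last `window` responses were 5xx."""
    recent = status_codes[-window:]
    if len(recent) < window:
        return False  # not enough samples to call it sustained
    failures = sum(1 for code in recent if 500 <= code <= 599)
    return failures / window >= threshold


def should_alert(baseline_hash: str, live_schema: dict,
                 status_codes: list[int]) -> bool:
    """Alert on schema drift or a sustained run of server errors."""
    return schema_hash(live_schema) != baseline_hash or sustained_5xx(status_codes)
```

Serialising with `sort_keys=True` before hashing means two schemas that differ only in key order produce the same digest, so the daemon alerts on real changes rather than on serialisation noise.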

Outcome

Customers see integrations that “just work” moments after OAuth approval, yet can export or purge their data on demand, backed by documented controls. Security teams receive contract-level guarantees on encryption, incident reporting and audit access; operations keeps a single, code-driven pipeline; and auditors get immutable evidence that every byte moved through a vetted, reversible path—fully aligned with the Jeeva AI Company Policy Packet.